Samah Gad’s Research Covered by the American Historical Association

The new methods of “big data” analysis can inform and expand historical analysis in ways that allow historians to redefine expectations regarding the nature of evidence, the stages of analysis, and the claims of interpretation.1 For historians accustomed to interpreting the multiple causes of events within a narrative context, exploring the complicated meaning of polyvalent texts, and assessing the extent to which selected evidence is representative of broader trends, the shift toward data mining (specifically text mining) requires a willingness to think in terms of correlations between actions, accept the “messiness” of large amounts of data, and recognize the value of identifying broad patterns in the flow of information.2

The new methods of “big data” analysis can inform and expand historical analysis in ways that allow historians to redefine expectations regarding the nature of evidence, the stages of analysis, and the claims of interpretation.1 For historians accustomed to interpreting the multiple causes of events within a narrative context, exploring the complicated meaning of polyvalent texts, and assessing the extent to which selected evidence is representative of broader trends, the shift toward data mining (specifically text mining) requires a willingness to think in terms of correlations between actions, accept the “messiness” of large amounts of data, and recognize the value of identifying broad patterns in the flow of information.2

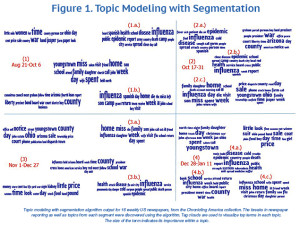

Our project, An Epidemiology of Information, examines the transmission of disease-related information about the “Spanish flu,” using digitized newspaper collections available to the public from the Chronicling America collection hosted by the Library of Congress. We rely primarily on two text mining methods: (1) segmentation via topic modeling and (2) tone classification. Although most historical accounts of the Spanish flu make extensive use of newspapers, our project is the first to ask how looking at these texts as a large data source can contribute to historical understanding of this event while also providing humanities scholars, information scientists, and epidemiologists with new tools and insights. Our findings indicate that topic modeling is most useful for identifying broad patterns in the reporting on disease, while tone classification can identify the meanings available from these reports. Read more.