Sanghani Center Student Spotlight: Shengzhe Xu

Shengzhe Xu chose to pursue a Ph.D. in computer science at Virginia Tech because the Sanghani Center offered him the opportunity to investigate cutting-edge challenges of academic importance and to find ways of applying the resulting methodologies to real-world problems.

“What I like best about the center is that everyone is encouraged to pursue their own areas of interest,” said Xu, who is advised by the center’s director, Naren Ramakrishnan. “As students in this free scientific research environment, we just need to concentrate on improving ourselves and conduct in-depth research on the topics we choose.”

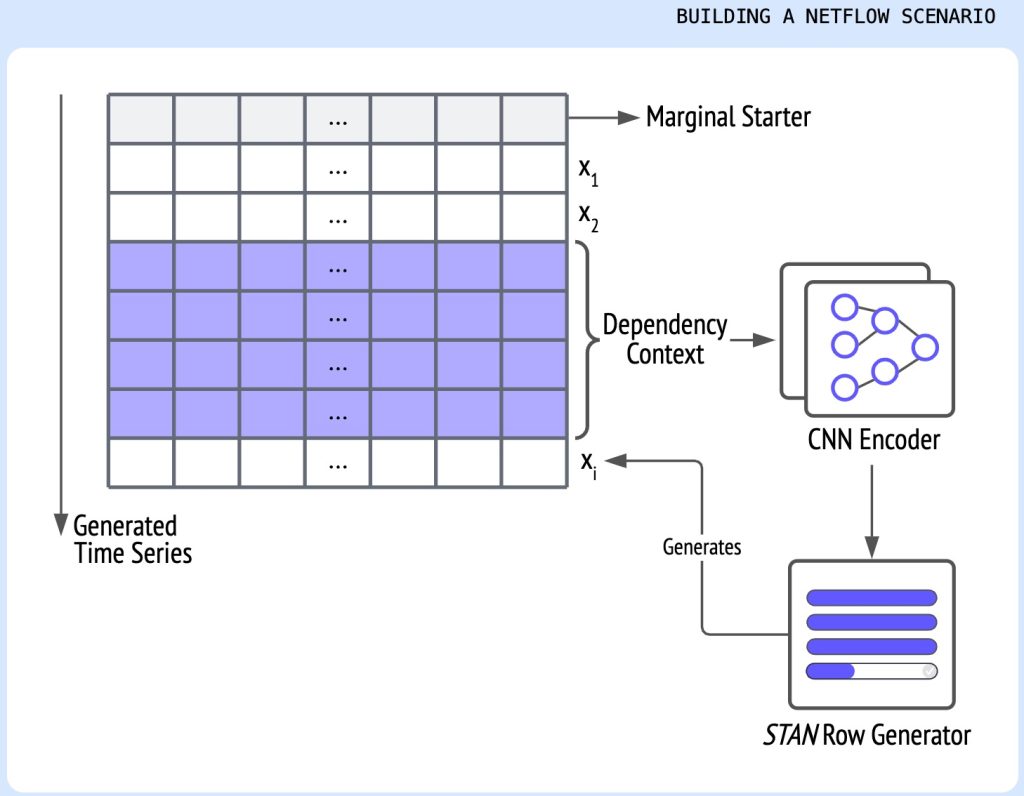

Xu’s work explores semantic analysis of tabular data as well as synthetic tabular data generation. “A real-world example of this is network traffic data,” he said. “Every operation on the Internet is recorded like a footprint that we can model by using deep learning methods.”

But capturing the semantics of tabular data is a challenging problem. Unlike the text and images studied in traditional fields such as natural language processing and computer vision, the overall portrait of tabular data is difficult for humans, even domain experts, to judge because it has complex dependencies that need to be explored in depth.

“Deep learning models have achieved great success in recent years but progress in some domains like cybersecurity is stymied due to a paucity of realistic datasets. For privacy reasons, organizations are reluctant to share such data, even internally,” he said. “In order to protect the privacy of training data from being leaked, it is important to explore how to generate good enough tabular data in terms of both training performance and privacy protection.”

Xu presented his work on “STAN: Synthetic Network Traffic Generation with Generative Neural Models” at the MLHat Workshop on Deployable Machine Learning for Security Defense during the 2021 SIGKDD Conference on Knowledge Discovery and Data Mining. The paper explored generating synthetic versions of real-world network traffic flow data so that sensitive information is protected from leakage.

Projected to graduate in 2024, Xu hopes to continue his research as an industry professional.